How I host multiple websites on Linode with HAProxy

7.4.2021

This website and a couple of others all run on a single small Linode virtual server. Most sites are generated with the static site generator Sculpin, but some require PHP and other technologies.

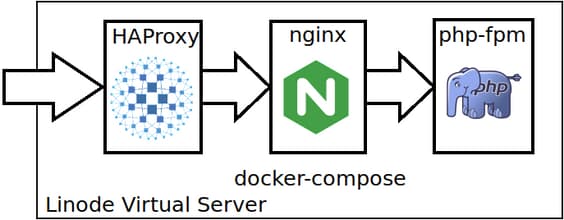

I wanted to keep the server relatively clean and be able to test my setup locally. Therefore I created a couple of Docker containers and use docker-compose to run the whole system. All pages are served over HTTPS, using Let's Encrypt to get free SSL certificates. In this post I want to share the tools I use.

HAProxy

HAProxy is a nice small server to handle incoming traffic and route it to the correct backend server. Using acl rules, we can decide which backend to go to, based on domain, path and so on.

You can find examples for these things on the web. What I want to highlight is the integration with Let's Encrypt. I tried a couple of fancy Docker images that supposedly handle everything around Let's Encrypt. In the end I found a solution that I find quite simple: installing the certbot CLI into the HAProxy Docker image. The relevant HAProxy configuration is:

    frontend http-in
        bind *:80
        bind *:443 ssl crt /etc/ssl/private/
        http-response set-header Strict-Transport-Security max-age=36000
        http-request set-header X-Forwarded-Port %[dst_port]
        http-request add-header X-Forwarded-Proto https if { ssl_fc }
        redirect scheme https code 301 if !{ path_beg /.well-known/acme-challenge/ } !{ ssl_fc }
        acl letsencrypt-acl path_beg /.well-known/acme-challenge/
        use_backend letsencrypt if letsencrypt-acl
        default_backend nginx

    backend nginx
        retry-on all-retryable-errors
        http-request disable-l7-retry if METH_POST
        server nginx nginx:80 tfo

    backend letsencrypt
        server letsencrypt localhost:8080

The Dockerfile for the haproxy adds the letsencrypt certbot and copies the configuration and a bash script into the container:

    FROM haproxy:2.1-alpine
    RUN apk add bash socat certbot
    COPY haproxy-certificate-refresh.sh /usr/local/bin/haproxy-certificate-refresh
    RUN chmod +x /usr/local/bin/haproxy-certificate-refresh
    COPY haproxy.cfg /usr/local/etc/haproxy/haproxy.cfg

Certificate handling

The additional bash script haproxy-certificate-refresh.sh in the Dockerfile above is used to update certificates without restarting HAProxy:

    #!/bin/bash
    set -e

    LE_DIR=/etc/letsencrypt/live
    HA_DIR=/etc/ssl/private
    DOMAINS=$(ls ${LE_DIR})

    # update certs for HA Proxy
    for DOMAIN in ${DOMAINS}
    do
        if [ -d ${LE_DIR}/${DOMAIN} ]
        then
            cat ${LE_DIR}/${DOMAIN}/fullchain.pem ${LE_DIR}/${DOMAIN}/privkey.pem | tee ${HA_DIR}/${DOMAIN}.pem
            echo -e "set ssl cert ${HA_DIR}/${DOMAIN}.pem <<\n$(cat ${HA_DIR}/${DOMAIN}.pem)\n" | socat stdio /var/run/haproxy
            echo -e "commit ssl cert ${HA_DIR}/${DOMAIN}.pem" | socat stdio /var/run/haproxy
        fi
    done
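
The bundling step of the script above can be tried without a running HAProxy. The following is a minimal sketch, not the script I actually run: `build_haproxy_bundles` is a hypothetical function name, and the two directories are passed as arguments instead of using the fixed paths. It writes the combined file with a redirect instead of `tee`, so the private key is not echoed to the terminal.

```shell
# Sketch only: build_haproxy_bundles is a hypothetical helper, not part of the original script.
# Usage: build_haproxy_bundles <letsencrypt-live-dir> <haproxy-cert-dir>
build_haproxy_bundles() {
    local le_dir="$1" ha_dir="$2" dir domain
    # The trailing slash makes the glob match directories only (skips the README file).
    for dir in "${le_dir}"/*/; do
        # Skip when the glob matched nothing or the expected files are missing.
        [ -f "${dir}fullchain.pem" ] && [ -f "${dir}privkey.pem" ] || continue
        domain="$(basename "${dir}")"
        # HAProxy expects the certificate chain and the private key in one file.
        cat "${dir}fullchain.pem" "${dir}privkey.pem" > "${ha_dir}/${domain}.pem"
        chmod 600 "${ha_dir}/${domain}.pem"
        # Print the name of each domain that got a bundle.
        echo "${domain}"
    done
}
```

Called as `build_haproxy_bundles /etc/letsencrypt/live /etc/ssl/private`, it would produce the same `.pem` files as the loop above, but it does not push them into the running HAProxy; the `socat` calls are still needed for that.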

To create a new certificate, I run certbot in the running haproxy container:

    docker-compose exec haproxy certbot certonly -d my-domain.org
    docker-compose exec haproxy sh -c "cat /etc/letsencrypt/live/my-domain.org/fullchain.pem /etc/letsencrypt/live/my-domain.org/privkey.pem | tee /etc/ssl/private/my-domain.org.pem"

For this to work, DNS needs to be set up to point to the correct Linode.

(As usual, to add an additional subdomain into the same certificate, you can re-run the certbot command with additional subdomains in the -d parameter. Keep in mind that you need to repeat all subdomains and the main domain to add a subdomain.)
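
Because every name has to be repeated, it is easy to forget one. As a small sketch, the hypothetical helper `certbot_expand_command` below assembles the command from a list of names and only prints it, so the list can be checked before anything runs. Using certbot's `--expand` flag here is my assumption about what is wanted; without it, certbot asks interactively whether to expand the existing certificate.

```shell
# Sketch only: certbot_expand_command is a hypothetical helper that prints the
# command instead of running it, so the list of names can be reviewed first.
# Usage: certbot_expand_command <main-domain> [<additional-name>...]
certbot_expand_command() {
    local args="" name
    for name in "$@"; do
        # Every name that should be in the certificate gets its own -d option.
        args="${args} -d ${name}"
    done
    # --expand replaces the existing certificate with one covering the larger list.
    echo "docker-compose exec haproxy certbot certonly --expand${args}"
}
```

For example, `certbot_expand_command my-domain.org www.my-domain.org` prints a command that requests a certificate for both names.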

For certificate renewal, I set up a cronjob that executes from the root directory with the docker-compose file:

    /usr/local/bin/docker-compose exec haproxy certbot -q renew && \
    /usr/local/bin/docker-compose exec haproxy haproxy-certificate-refresh
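
A cron job has no terminal attached, while `docker-compose exec` allocates a pseudo-TTY by default, so depending on the setup the commands may need the `-T` option. The following is a minimal sketch of a wrapper that cron could call. The names `renew_certificates` and `COMPOSE` are hypothetical, and the project directory is passed as an argument because the original path is not shown here.

```shell
# Sketch only: renew_certificates and COMPOSE are hypothetical names.
COMPOSE="${COMPOSE:-/usr/local/bin/docker-compose}"

# Usage: renew_certificates <directory-containing-docker-compose.yml>
# The body runs in a subshell, so the caller's working directory is unchanged.
# docker-compose looks for docker-compose.yml in the current directory.
# -T disables pseudo-TTY allocation, since cron provides no terminal.
# The refresh only runs when the renewal command succeeded.
renew_certificates() (
    cd "$1" &&
    "${COMPOSE}" exec -T haproxy certbot -q renew &&
    "${COMPOSE}" exec -T haproxy haproxy-certificate-refresh
)
```

The function returns a non-zero status if any step fails, which cron can report by mail.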

nginx

I don't do anything unusual with nginx. It runs behind the HAProxy, and therefore does not need to know about the SSL certificates. The communication between HAProxy, nginx and PHP-FPM runs inside docker and is not visible from the outside.

The Dockerfile for nginx uses nginx:stable and has one custom line, COPY etc/nginx /etc/nginx, to set up the configuration. It would probably make sense to mount the configuration directory instead of copying it into the image. Then I could change the configuration and have nginx reload it without rebuilding the image, avoiding downtime.

I configure each site in a file under etc/nginx/sites-enabled. I redirect either www. to the bare name, or the bare name to www., depending on which domain name I want to use. A static site is configured with the following (the root depends on the mount point in the docker-compose file below):

    server {
        server_name davidbu.ch;
        root /var/www/davidbu.ch;
        include common.conf;
        include images.conf;
    }

    server {
        server_name www.davidbu.ch;
        return 301 https://davidbu.ch$request_uri;
    }

Sites that run a PHP application look a bit more complex (in this example a dokuwiki):

    server {
        server_name www.dynamic-website.xy;
        root /var/www/dynamic-website.xy;
        client_max_body_size 12M;

        # Remember to comment the below out when you're installing, and uncomment it when done.
        location ~ /(conf/|bin/|inc/|install.php) { deny all; }

        location = / {
            return 301 /dokuwiki/;
        }

        location ~ /dokuwiki/doku.php/ {
            return 301 /dokuwiki/;
        }

        # Support for X-Accel-Redirect
        location ~ ^/dokuwiki/data/ { internal ; }

        location /dokuwiki { try_files $uri $uri/ @dokuwiki; }

        location @dokuwiki {
            # rewrites "doku.php/" out of the URLs if you set the userewrite setting to .htaccess in dokuwiki config page
            rewrite ^/dokuwiki/_media/(.*) /dokuwiki/lib/exe/fetch.php?media=$1 last;
            rewrite ^/dokuwiki/_detail/(.*) /dokuwiki/lib/exe/detail.php?media=$1 last;
            rewrite ^/dokuwiki/_export/([^/]+)/(.*) /dokuwiki/doku.php?do=export_$1&id=$2 last;
            rewrite ^/dokuwiki/(.*) /dokuwiki/doku.php?id=$1&$args last;
        }

        location ~ \.php$ {
            try_files $uri $uri/ /doku.php /index.php;
            include fastcgi_params;
            fastcgi_param SCRIPT_FILENAME $document_root$fastcgi_script_name;
            fastcgi_param SCRIPT_NAME $fastcgi_script_name;
            fastcgi_pass dynamic-website:9000;
        }

        include common.conf;
        index doku.php index.php index.html;
    }

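    server {
        server_name dynamic-website.xy;
        # Redirect straight to HTTPS and keep the requested path, as in the static site example.
        return 301 https://www.dynamic-website.xy$request_uri;
    }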

docker-compose

In the parent folder that contains the folders for HAProxy, nginx and PHP-FPM, I have a docker-compose.yml file. An excerpt from that file:

    version: "3.5"

    networks:
      linode: ~
      back:
        driver: bridge

    services:
      haproxy:
        restart: unless-stopped
        build: haproxy/
        ports:
          - ${HTTP_PORT}:80
          - ${HTTPS_PORT}:443
        depends_on:
          - nginx
        networks:
          - linode
          - back
        volumes:
          - ./_data/haproxy/certs.d:/etc/ssl/private:rw
          - ./_data/letsencrypt:/etc/letsencrypt:rw

      nginx:
        restart: unless-stopped
        build: nginx/
        depends_on:
          - dynamic-website
        networks:
          - back
        volumes:
          - /home/david/www/davidbu.ch/htdocs:/var/www/davidbu.ch:ro
          - /home/david/www/dynamic-website.xy/dokuwiki:/var/www/dynamic-website.xy/dokuwiki:ro
          # more volumes for the various applications

      dynamic-website:
        restart: unless-stopped
        build: php-fpm/
        networks:
          - back
        volumes:
          - /home/david/www/dynamic-website.xy/dokuwiki:/var/www/dynamic-website.xy/dokuwiki:rw

This configuration publishes the HAProxy container on the host ports given by HTTP_PORT and HTTPS_PORT. On the server these are 80 and 443, so HAProxy is the system that answers all HTTP and HTTPS requests.
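
The excerpt does not show where HTTP_PORT and HTTPS_PORT come from. One common option, and an assumption on my part here, is a `.env` file next to docker-compose.yml, which docker-compose reads for variable substitution. A sketch for the server could look like this:

```shell
# .env (sketch, assumed file): values substituted into docker-compose.yml
# Ports on the host that HAProxy is published on.
HTTP_PORT=80
HTTPS_PORT=443
```

For testing the setup locally, the same file could use unprivileged ports such as 8080 and 8443 instead (hypothetical values).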

Outlook

I generate my static websites with Sculpin, which allows me to write Markdown or HTML. I wrote about that topic yesterday. I have a git repository for the Sculpin project with the page source, and another git repository for the generated files. I set up Sculpin to generate the files into that second repository, so that I can then commit and push changes to the output files and use git pull to update the page on the Linode server.

If you want to add new sites without downtime, you would need to mount the nginx configuration and, instead of mounting a separate volume per website, mount a parent folder of the websites into the nginx and php-fpm containers, so that you can add sites on the fly.
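
With a mounted configuration, applying a change could look roughly like the following sketch. It is not something I run in the setup described here: `reload_nginx` and `COMPOSE` are hypothetical names, and it assumes the service is called nginx as in the docker-compose excerpt. It only uses the standard `nginx -t` (check configuration) and `nginx -s reload` (reload without restart) commands.

```shell
# Sketch only: reload_nginx and COMPOSE are hypothetical names; assumes the nginx
# configuration is mounted into the container rather than copied into the image.
COMPOSE="${COMPOSE:-docker-compose}"

# nginx -t validates the configuration; the reload only happens when it passes,
# so a typo in a new site file does not take the running sites down.
reload_nginx() {
    "${COMPOSE}" exec -T nginx nginx -t &&
    "${COMPOSE}" exec -T nginx nginx -s reload
}
```

Run from the directory with the docker-compose file, `reload_nginx` returns a non-zero status and leaves the running configuration untouched if the check fails.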

Anyway, I hope this braindump is useful to you. If you want to give Linode a try, please use this link to give me some credit.